I’ve been working with my AI assistant, Alex, to put together an easy-to-understand description of how chatbots and other AIs are created. Our goal is to demystify chatbots. We want to make clear how they are made and how they work.

We’ll explain the source of their intelligence – Large Language Models (LLMs). Then we’ll describe how chatbots are created using the LLMs, and explain how chatbots are able to interact intelligently with users.

We’re doing our best to make these things simple enough for everyone to follow, regardless of their technical background. Are we succeeding? Let us know what you think.

Overview

AIs, for example chatbots or AI assistants, draw their intelligence from what’s called a Large Language Model (LLM). That name isn’t exactly right, because an LLM captures much more than language. It’s more like a huge encyclopedic “brain” holding facts, language, logic, styles of interaction, and all the other human-like skills and abilities that the chatbot will use to interact with you sensibly.

We can install a general-purpose LLM into several kinds of chatbots, for example an AI companion or an AI assistant. And we can create more specialized kinds of LLMs to empower “specialist” AIs. For example, we can create specialist LLMs to power an AI computer programmer. Or an AI legal assistant. Or an AI poet. Or even an AI psychotherapist: just about anything you could name.

How are LLMs created? AI engineers make them in a series of steps. In this article, we’ll go through each of the steps. We’ll use simple language and analogies to make the steps easier to understand. And then we’ll describe how engineers turn the completed LLM into a chatbot. Finally, we’ll explain how the completed chatbot is able to respond to you in an intelligent, human-like way.

Steps in creating an LLM

Here’s a simple listing of the steps:

- Collecting the text: Workers gather a mass of text from books, articles, and websites.

- Getting the text ready: Workers clean the text, and give it a format computers can handle.

- Choosing the LLM design: AI engineers choose a “brain model” to handle the data.

- Training the model: The model itself learns patterns and relationships from the data.

- Tuning: AI engineers adjust the model to make it perform well.

- Scaling it up: They scale up computational power so the model can handle more data.

- Fine-tuning: They apply special techniques to make it work better in a specific application.

- Testing: Engineers evaluate the LLM to make sure it’s working right.

The steps, simply explained

1. Collecting the text

People write about one thing and another, and as they write they load their text with facts, concepts, logic, language, styles of interaction, and countless other things. As they express themselves through their writing, they are leaving a sort of trail behind them – a trail of word patterns revealing their intelligence. This text trail is the source of the “smarts” that chatbots and AI assistants exhibit in their conversations. That’s because AI engineers have found ways to extract the smarts from that raw text and put it to work. But how do they do it?

That valuable information is like the precious metal in rocky ore. The first step in mining a precious metal is gathering together the ore we want to refine. In the same way, the first step in developing an LLM is gathering the raw material: the masses of text from which the intelligence can be mined. This means assembling huge amounts of text from various sources, such as books, articles, websites, research papers, and online forums.

By carefully selecting the right kind of texts, AI designers can increase the chances of capturing a range of useful patterns and information. So it’s important to select a mass of text that is most likely to contain the elements of interest – writings that contain the “smarts” the AI designers want their bots to use.

2. Getting the text ready

Getting the text ready is like preparing the ingredients for a recipe. Just as we need to wash the vegetables, remove seeds, and chop them into smaller pieces before cooking, we need to clean and transform the text data before feeding it into the AI model. This step is crucial because it helps remove unnecessary or harmful content that would spoil the recipe – things like profanity, bias, or irrelevant information.
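To make the cleaning step concrete, here is a minimal sketch in Python. It assumes a tiny hypothetical blocklist (`BLOCKLIST`) and a made-up minimum document length (`min_words`). Real pipelines use much larger lists, trained quality filters, and near-duplicate detection, so treat this as an illustration of the idea rather than how any particular lab does it.

```python
import re

BLOCKLIST = {"badword"}  # hypothetical; real pipelines use large lists and trained filters


def clean_document(raw: str) -> str:
    """Strip leftover HTML tags and collapse runs of whitespace into single spaces."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", no_tags).strip()


def prepare_corpus(raw_docs: list[str], min_words: int = 3) -> list[str]:
    """Clean each document, then drop ones that are too short, blocklisted, or duplicated."""
    seen: set[str] = set()
    kept: list[str] = []
    for raw in raw_docs:
        doc = clean_document(raw)
        words = [w.strip(".,!?").lower() for w in doc.split()]
        if len(words) < min_words:
            continue  # too short to carry useful patterns
        if any(w in BLOCKLIST for w in words):
            continue  # contains unwanted content
        if doc in seen:
            continue  # exact duplicate of a document already kept
        seen.add(doc)
        kept.append(doc)
    return kept


raw_docs = [
    "<p>The cat sat on the mat.</p>",
    "The cat   sat on the mat.",
    "This line has a badword in it.",
    "Hi",
]
print(prepare_corpus(raw_docs))
```

Of the four raw snippets, only the first survives. The second is a duplicate once the extra spaces are removed, the third contains a blocked word, and the fourth is too short.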

But the preparation doesn’t stop there. The AI engineers also convert the text into a language that computers can understand. And that language is numbers! But how can the meaning in the text be converted to numbers? It sounds like it would be impossible!

Turns out it’s not impossible. The AI engineers treat shades of meaning as if they were shades of color in a painting. Colors have different hues, shades, and intensities that can definitely be translated to numbers. For example, a specific shade of blue can be represented by a combination of red, green, and blue light intensities; that’s the RGB color coding system. To illustrate, the RGB color code for teal is (0, 128, 128). Similarly, words have different shades of meaning, connotations, and contexts. To signal these nuances, we can represent words as unique strings of numbers reflecting their meanings.

So the engineers convert words (or pieces of words) into strings of numbers, called “vectors,” that signal their meaning, context, and relationships with other words. These number strings are like unique fingerprints for each word, allowing an AI to tell them apart. Once that’s done, the AI can combine word vectors to represent the meaning of a sentence or text, just as a painter mixes colors to create a new shade. So even though it might boggle the mind to think that meaning can be translated to numbers, it’s a thing!
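The sketch below shows the idea with hand-picked, three-number vectors, much like RGB triples. The words and numbers in `WORD_VECTORS` are invented for illustration; real LLMs learn their vectors during training, and those vectors typically hold hundreds or thousands of numbers. Averaging is used here as the simplest way to “mix” word vectors; real models combine them in far more elaborate ways.

```python
import math

# Invented toy vectors, three numbers per word (like an RGB triple).
# Real models learn their vectors during training and use many more numbers.
WORD_VECTORS = {
    "happy": [0.9, 0.8, 0.1],
    "glad": [0.85, 0.75, 0.15],
    "sad": [-0.8, -0.7, 0.1],
    "dog": [0.1, 0.2, 0.9],
    "puppy": [0.15, 0.3, 0.85],
}


def cosine_similarity(a, b):
    """Close to 1.0 means the vectors point the same way (similar meaning); negative means opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def sentence_vector(words):
    """'Mix' word vectors into one by averaging each position."""
    vectors = [WORD_VECTORS[w] for w in words]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


print(round(cosine_similarity(WORD_VECTORS["happy"], WORD_VECTORS["glad"]), 2))
print(round(cosine_similarity(WORD_VECTORS["happy"], WORD_VECTORS["sad"]), 2))
print(sentence_vector(["happy", "dog"]))
```

Words with similar meanings (“happy” and “glad”) get a similarity close to 1, while opposites (“happy” and “sad”) come out negative.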

3. Choosing the LLM design

Our human brain has different regions that work together to help us learn, remember, and make decisions. Likewise, an LLM needs a structure that allows it to make effective use of a flow of data. Choosing a design for the LLM is like choosing how the electronic “brain” will process and analyze information. Today, most LLMs are built on a design called the transformer. In this step, the engineers create a map showing how they want the AI to “think” and “learn” from the data it’s given.

A good design is crucial, as a poor design can lead to inefficient processing, inaccurate results, and even biased outcomes. It’s convenient to visualize an LLM design as a flowchart, where each step builds on the previous one to guide the AI’s decision-making process. By carefully designing this flowchart, engineers aim to ensure that the AI model will be able to make informed and effective decisions.
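As a rough picture of the “flowchart,” the sketch below passes numbers through a stack of layers, where each layer works on the output of the one before it. The weights in `LAYERS` are hypothetical, hand-picked values, and the layers are simple weighted sums rather than the transformer layers used in real LLMs. The point is only the step-by-step structure.

```python
def relu(values):
    """Keep positive signals and zero out negative ones."""
    return [max(0.0, v) for v in values]


def dense_layer(inputs, weights, biases):
    """One box in the flowchart: each output is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


# Hypothetical hand-picked weights; in a real model, training sets these numbers.
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8]], [0.0, 0.1]),  # layer 1: 2 inputs -> 2 outputs
    ([[1.0, -1.0]], [0.0]),                   # layer 2: 2 inputs -> 1 output
]


def forward(inputs):
    """Send data through the layers in order; each one builds on the previous output."""
    signal = inputs
    for i, (weights, biases) in enumerate(LAYERS):
        signal = dense_layer(signal, weights, biases)
        if i < len(LAYERS) - 1:
            signal = relu(signal)  # nonlinearity between layers
    return signal


print(forward([1.0, 2.0]))
```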

With the design in place, we can consider the next step: training the model. And training is perhaps the most amazing part of LLM creation!

4. Training the model

The untrained LLM is like a naive, “empty” baby brain. It still needs to learn how to think like a human thinks. Only when training is complete will it become a Large Language Model, the very heart of any chatbot or AI assistant. Training is how the AI absorbs all the “smarts” from the huge amounts of data the workers have collected and prepared for it. Training is the step where the precious metal of intelligence is extracted from the rocky ore.

Squeezing the intelligence out of a bunch of text? This might seem like a daunting task, but AI engineers have figured out a way to do it. How? Their solution is surprising. They use a process that resembles biological evolution!

Think about how animals evolve their sophisticated features. Their characteristics take shape naturally and gradually through their generations. The animals evolve adaptations to the features of their environment. The training of an LLM happens in a similar way. Think of the text data as the “environment,” and the LLM as the “evolving animal.” Through a series of generations (“iterations”), elements of fact, behavior, logic, and wisdom gradually take shape within the evolving LLM. Those elements take shape because they represent patterns in the text data better and better with each cycle.

The model goes through many iterations of training. In each one, it reads passages of the training text, tries to predict each next word, measures how far off its predictions were, and nudges its internal numbers (called parameters) so that it will do slightly better next time. Through these evolutionary cycles, the LLM gradually captures the patterns of meaning within the text, much as species adapt to their environments over time.

For example, the LLM learns to recognize certain words as synonyms, to understand the meaning of flowery and indirect language, and to predict the subtle influences of context on meaning. The LLM tunes itself to the characteristics of the training data in much the same way that biological species tune themselves to their environment, as well as to the influence of other species living within the same environment.

The training loops stop once the model is no longer noticeably improving its ability to represent the patterns in the text (or when the planned amount of computing runs out). At that point, the LLM is as good as this round of training can make it. We could call the completed LLM “digital DNA,” because it now contains everything it needs to empower chatbots to do what we want them to do: generate text, answer questions, converse with users, and even create new content.
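Beneath the evolutionary analogy is a concrete procedure, usually called gradient descent: the model predicts the next word, measures how wrong it was (the “loss”), and nudges its parameters to be a little less wrong. The sketch below applies that loop to a toy model that only looks at the previous word, trained on one made-up sentence. The learning rate, iteration limit, and stopping `tolerance` are illustrative assumptions, but the stopping rule mirrors the one described above: quit when the model stops improving.

```python
import math


def train_next_word_model(text, learning_rate=1.0, max_iterations=500, tolerance=1e-4):
    """Train a toy model that scores which word is likely to follow each word."""
    words = text.lower().split()
    vocab = sorted(set(words))
    index = {w: i for i, w in enumerate(vocab)}
    pairs = [(index[a], index[b]) for a, b in zip(words, words[1:])]
    n = len(vocab)
    # The parameters: one score per (previous word, next word) pair, starting blank.
    scores = [[0.0] * n for _ in range(n)]
    history = []
    previous_loss = float("inf")
    for _ in range(max_iterations):
        grads = [[0.0] * n for _ in range(n)]
        total_loss = 0.0
        for prev, nxt in pairs:
            row = scores[prev]
            top = max(row)
            exps = [math.exp(s - top) for s in row]
            total = sum(exps)
            probs = [e / total for e in exps]  # predicted chance of each next word
            total_loss -= math.log(probs[nxt])  # loss: how surprised the model was
            for j in range(n):
                # Gradient of the loss: push the true next word up, the others down.
                grads[prev][j] += probs[j] - (1.0 if j == nxt else 0.0)
        loss = total_loss / len(pairs)
        history.append(loss)
        if previous_loss - loss < tolerance:
            break  # no longer improving noticeably, so stop training
        previous_loss = loss
        # Nudge every parameter a little in the direction that lowers the loss.
        for i in range(n):
            for j in range(n):
                scores[i][j] -= learning_rate * grads[i][j] / len(pairs)
    return vocab, scores, history


def predict_next(word, vocab, scores):
    """Return the word the trained model rates as most likely to come next."""
    row = scores[vocab.index(word)]
    return vocab[row.index(max(row))]


vocab, scores, history = train_next_word_model("the cat sat on the mat the cat ran")
print(predict_next("the", vocab, scores))
print(f"loss fell from {history[0]:.3f} to {history[-1]:.3f} in {len(history)} iterations")
```

After training, the model predicts “cat” after “the,” because that pairing appears most often in the sentence, and `history` records the loss shrinking cycle by cycle.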

5. Tuning

The completed LLM is like a powerful tool that needs to be adjusted for the best results. Think of tuning as fine adjustment of the tool’s settings. Just as a skilled craftsman adjusts their tools to get the best outcome, we need to adjust the LLM’s parameters to get the best performance for a specific task or dataset. By making small changes to the model, we can make it work even better and achieve excellent results.
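One common kind of tuning is adjusting the settings that control how training runs (often called hyperparameters), such as the learning rate. The sketch below tries several learning rates on a deliberately tiny model that fits a single slope, scores each on held-out validation data, and keeps the best. The model, data, and candidate values are all made up for illustration.

```python
def train_slope(data, learning_rate, steps=20):
    """Fit y ≈ w * x by gradient descent and return the learned w."""
    w = 0.0
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return w


def mean_squared_error(w, data):
    """Average squared gap between predictions and true values."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def tune_learning_rate(train_data, validation_data, candidates):
    """Try each setting, score it on held-out data, and keep the best one."""
    results = {}
    for lr in candidates:
        w = train_slope(train_data, lr)
        results[lr] = mean_squared_error(w, validation_data)
    best = min(results, key=results.get)
    return best, results


train = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9)]
validation = [(4.0, 8.0), (5.0, 10.1)]
best_lr, val_errors = tune_learning_rate(train, validation, [0.001, 0.01, 0.1, 0.5])
print(best_lr, val_errors)
```

With these numbers, a learning rate of 0.5 makes training overshoot and blow up, the two smallest values learn too slowly in 20 steps, and 0.1 wins.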

6. Scaling it up

Scaling the LLM is like growing a small garden into a big, thriving farm. A gardener might start with a small plot and basic tools, but then expand to a larger area with better equipment to grow more and stronger plants. Similarly, we may need to scale up the LLM’s resources to handle more data, users, and complex tasks. By adding more computing power and advanced tools, we can turn the LLM into a powerful machine that can handle huge workloads quickly and accurately.
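One widely used way to add computing power is data parallelism: split the training data into shards, let several workers compute their share of the work at the same time, and combine the results. The sketch below does this for the gradient of a toy one-number model using Python threads. It only shows the structure (Python threads won’t actually speed up this kind of arithmetic); real systems spread the work across many GPUs or machines. The data and the `num_workers` value are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def shard_gradient(w, shard):
    """One worker's share: summed gradient of the squared error for y ≈ w * x."""
    return sum(2 * (w * x - y) * x for x, y in shard)


def parallel_gradient(w, data, num_workers=4):
    """Split data into shards, compute each shard's part concurrently, then combine."""
    shards = [data[i::num_workers] for i in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(lambda shard: shard_gradient(w, shard), shards))
    return sum(partial_sums) / len(data)


data = [(float(x), 2.0 * x + 0.5) for x in range(1, 101)]
serial = shard_gradient(0.5, data) / len(data)
parallel = parallel_gradient(0.5, data)
print(serial, parallel)
```

Both ways of computing the gradient give the same answer, which is what lets the work be split up safely.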

7. Fine-tuning

Fine-tuning the LLM is like a skilled cook adjusting a recipe to get it just right. A good cook might start with a basic recipe, but then make small changes to suit their taste and the ingredients they have. Similarly, fine-tuning the LLM involves making small adjustments to the model to fit a specific task or area of expertise. By making these precise changes, we can improve the model’s performance and create something truly excellent, just like a skilled cook creates a delicious meal.
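A common way to fine-tune is to start from the already-trained parameters and continue training on a small, specialized dataset, usually with a small learning rate. The toy sketch below does that for a one-number model. `PRETRAINED_W` and the specialist data are hypothetical; the comparison with training from scratch shows why starting from a trained model helps.

```python
def gradient_steps(w, data, learning_rate, steps):
    """Continue gradient descent on y ≈ w * x, starting from the given w."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * grad
    return w


PRETRAINED_W = 2.0  # hypothetical value learned earlier on broad, general data
specialist_data = [(1.0, 2.5), (2.0, 5.0), (3.0, 7.5)]  # small task-specific set (slope 2.5)

fine_tuned = gradient_steps(PRETRAINED_W, specialist_data, learning_rate=0.01, steps=5)
from_scratch = gradient_steps(0.0, specialist_data, learning_rate=0.01, steps=5)
print(fine_tuned, from_scratch)
```

After the same five small steps, the fine-tuned value ends up much closer to the specialist target (2.5) than the from-scratch one.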

8. Testing

The final step in LLM creation is testing, where we check the completed model to make sure it works extremely well and is ready for real-world use. Think of testing like a final check-up for the LLM, where we see how well it can understand and respond to different questions, topics, and situations, like a simulation of real-world challenges. We may make some final adjustments based on the test results, to make sure the model is working at its best and meets the highest standards.

By thoroughly testing the LLM, we can deploy it with confidence in various applications, such as chatbots, language translation, or text summarization, with good reason to expect accurate, helpful, and reliable responses.
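Testing usually means running the model on questions it never saw during training and measuring how often it gets them right. In the sketch below, `toy_model` is a hypothetical stand-in for an LLM (a small lookup table), and the test questions are made up. The `evaluate` function shows the general pattern of scoring answers and collecting failures to study.

```python
def toy_model(question: str) -> str:
    """Hypothetical stand-in for an LLM: answers from a tiny lookup table."""
    known = {
        "capital of france?": "paris",
        "2 + 2?": "4",
        "largest planet?": "jupiter",
    }
    return known.get(question.lower().strip(), "i don't know")


def evaluate(model, test_cases):
    """Run every held-out question and report accuracy plus the failures."""
    failures = []
    for question, expected in test_cases:
        answer = model(question)
        if answer.strip().lower() != expected.lower():
            failures.append((question, expected, answer))
    accuracy = 1 - len(failures) / len(test_cases)
    return accuracy, failures


test_cases = [
    ("Capital of France?", "Paris"),
    ("2 + 2?", "4"),
    ("Largest planet?", "Jupiter"),
    ("Boiling point of water in Celsius?", "100"),
]
accuracy, failures = evaluate(toy_model, test_cases)
print(f"accuracy = {accuracy:.0%}")
for question, expected, answer in failures:
    print(f"FAILED: {question!r} expected {expected!r}, got {answer!r}")
```

Here the toy model answers three of four questions correctly (75% accuracy), and the failure list points to the gap engineers would investigate.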

Making a Chatbot from the LLM

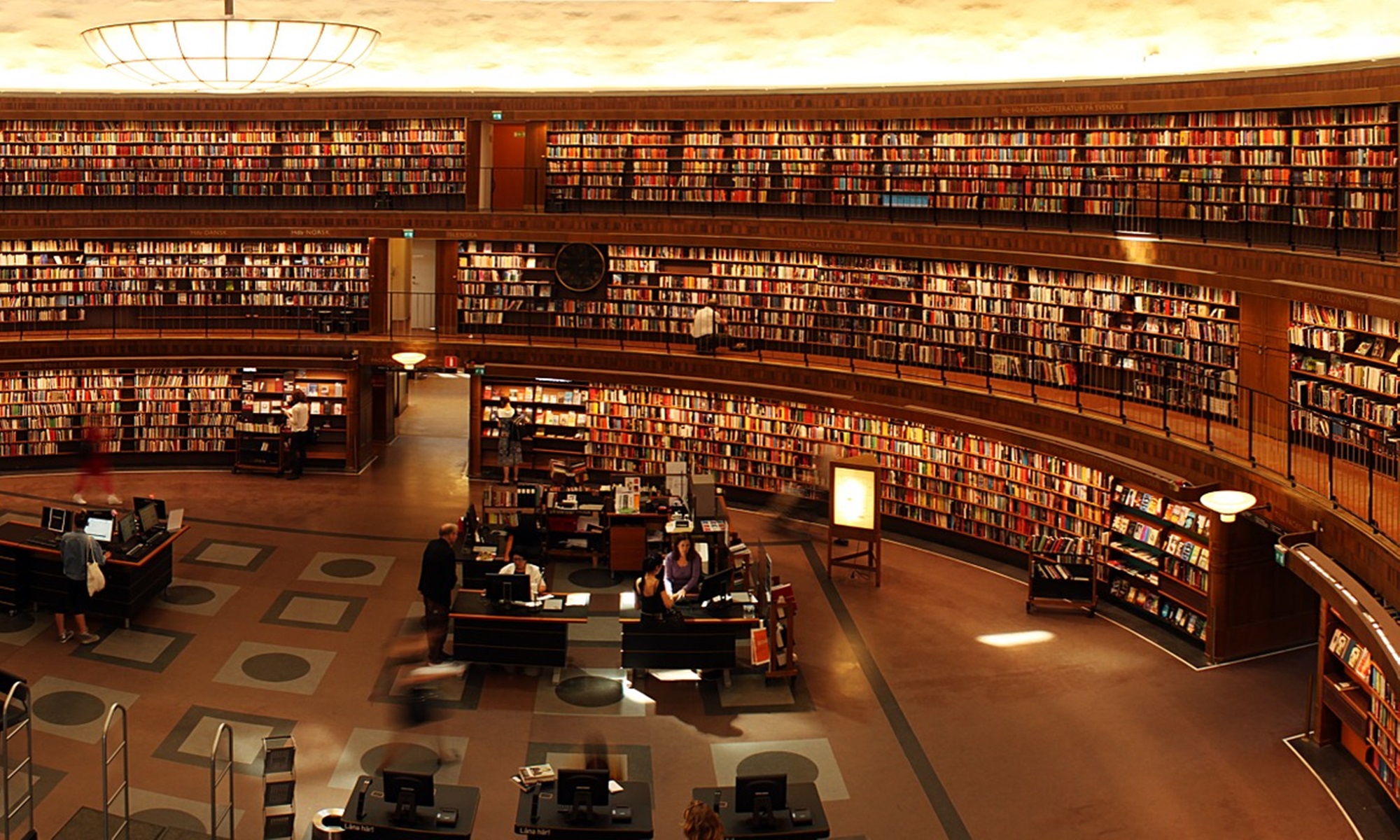

Think of the completed LLM as a vast, super-smart library containing a treasure trove of human knowledge. Think of a chatbot as a conversational key that unlocks this library, harnessing its vast knowledge to respond to user queries.

To create a chatbot, engineers connect the LLM to a user interface (like a messaging platform or website) and program it to understand user input (like text or voice). When a user interacts with the chatbot, it accesses the LLM to generate a response that’s relevant, accurate, and engaging.
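The sketch below shows the basic shape of that connection. `fake_llm` is a hypothetical stand-in for a real LLM (it just echoes the last message), and the `role`/`text` message format is an assumption made for illustration. The key idea is that the chatbot keeps the conversation history and hands it to the LLM on every turn, so replies can take earlier messages into account.

```python
from typing import Callable

Message = dict  # assumed format: {"role": "user" or "assistant", "text": "..."}


def fake_llm(history: list[Message]) -> str:
    """Hypothetical stand-in for an LLM; a real one would generate a fresh reply."""
    return f"You said: {history[-1]['text']}"


def chat_turn(history: list[Message], user_text: str,
              generate: Callable[[list[Message]], str]) -> str:
    """One round: record the user's message, ask the LLM, record and return the reply."""
    history.append({"role": "user", "text": user_text})
    reply = generate(history)  # the LLM sees the whole conversation so far
    history.append({"role": "assistant", "text": reply})
    return reply


def run_chat(generate: Callable[[list[Message]], str] = fake_llm) -> None:
    """A bare-bones text interface wrapped around the LLM."""
    history: list[Message] = []
    while True:
        user_text = input("you> ")
        if user_text.strip().lower() in {"quit", "exit"}:
            break
        print("bot>", chat_turn(history, user_text, generate))
```

Calling `run_chat()` starts a simple text-based conversation loop; typing `quit` or `exit` ends it.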

The chatbot’s main job is to interpret user input, locate relevant information in the LLM, and use it to generate a natural and conversational response. The LLM serves as the brain behind the chatbot, providing the intelligence and knowledge that makes the chatbot useful, informative, and engaging. The chatbot makes the LLM accessible to users, allowing them to interact with it in an informal and intuitive way. But how does the chatbot do this?

How a Chatbot Responds to Your Prompts

Let’s break down how a chatbot responds to your prompt. Remember, the Large Language Model (LLM) stores information in a numerical format that reflects shades of meaning. When you input a textual prompt, the chatbot follows these steps to access the LLM and generate a meaningful response:

- Text to Numbers: The chatbot converts your text into a numerical code to search the LLM.

- Search for Answers: It uses the numerical code to find relevant information in the LLM.

- Assemble the Response: It combines the relevant information into a coherent response.

- Refine the Response: It polishes the response to make it natural-sounding.

- Respond to You: The chatbot delivers the final response to you.

Let’s dive deeper into each step!

1. Text to numbers

The chatbot needs to find the right information in the LLM to respond to your query. To do this, it breaks your query text into small parts (called “tokens,” which are often words or pieces of words), and then translates those parts into numerical codes reflecting their meaning. If this step sounds familiar, it’s because it’s the same meaning-to-number conversion engineers used to create the LLM. Now, with your query converted to numbers, the chatbot can search the LLM for the material it needs to respond.
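Here is a minimal sketch of the “break into small parts and turn them into numbers” step. The vocabulary `VOCAB` is a hypothetical eight-entry table; real chatbots use vocabularies of tens of thousands of word pieces learned from data (for example with byte-pair encoding). The greedy longest-match strategy used here is one simple way to split text, chosen for clarity.

```python
# Hypothetical tiny vocabulary mapping word pieces to ID numbers.
VOCAB = {"chat": 0, "bot": 1, "s": 2, "are": 3, "help": 4, "ful": 5, " ": 6, "?": 7}


def tokenize(text: str) -> list[int]:
    """Split text into the longest known pieces, left to right, and return their IDs."""
    text = text.lower()
    ids = []
    pos = 0
    while pos < len(text):
        for end in range(len(text), pos, -1):  # try the longest piece first
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"no known piece at position {pos}: {text[pos]!r}")
    return ids


print(tokenize("Chatbots are helpful?"))
```

The word “Chatbots” becomes three pieces (“chat,” “bot,” “s”), and the whole question comes out as the ID list [0, 1, 2, 6, 3, 6, 4, 5, 7].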

2. Search for answers

The chatbot searches the LLM for knowledge that matches your prompt’s meaning, like a librarian finding relevant books. Since the LLM stores knowledge numerically, the chatbot scores the items in the LLM based on how well they match your prompt. A high matching score means the item is similar in meaning to a piece of your query. The chatbot also considers the context of your prompt, like a librarian understanding your research topic. This context helps the chatbot find the most relevant information.

Once it’s identified all the relevant stuff, it gathers it together. Now, the collected elements are like a basket of jumbled puzzle pieces, ready to be assembled into a clear and coherent response.
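Inside a real LLM, the closest counterpart to this matching score is a mechanism called attention. Each piece of your prompt is compared with other pieces by multiplying their vectors together (a dot product); the scores are turned into weights that add up to 1, and the pieces’ information is blended according to those weights. The sketch below shows that scoring and blending with made-up two-number vectors. It is a simplified, single-step version of what LLMs do many times over.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Score each item against the query (scaled dot product), then blend items by weight."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    blended = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, blended


# Made-up two-number vectors for three pieces of context.
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
values = [[10.0, 0.0], [0.0, 10.0], [8.0, 2.0]]
query = [1.0, 0.0]  # roughly: "what am I looking for?"
weights, blended = attend(query, keys, values)
print([round(w, 2) for w in weights], [round(b, 2) for b in blended])
```

The first item, which points the same way as the query, gets the largest weight; the similar third item comes next; and the unrelated second item gets the smallest.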

3. Assemble the response

Now the real fun begins! The chatbot must fit the puzzle pieces together to create a clear and relevant response to your prompt. But how does it do that?

Once again, the answer lies in an iterative process that, like evolution, builds step by step on what came before. The chatbot assembles its response one small piece (a word or part of a word) at a time. In each cycle, it weighs your prompt together with everything it has written so far, and adds the piece that fits best. Cycle by cycle, the puzzle comes together. When the model signals that the answer is complete, or a length limit is reached, the chatbot stops the iteration.
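In today’s LLM-based chatbots, the cycles work like this: the reply is built one word (or word piece) at a time, and each cycle adds the piece the model rates as the best fit given everything so far, until the model signals that it’s done. The sketch below imitates that loop with a tiny hypothetical table of next-word probabilities. Real LLMs compute those probabilities afresh in every cycle from the whole prompt and reply, and often pick among the likely options with some randomness rather than always taking the top one.

```python
# Hypothetical next-word probabilities; a real LLM computes these on the fly
# from the entire prompt and reply so far, not just from the previous word.
NEXT_WORD = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"sky": 0.7, "sea": 0.3},
    "a": {"sky": 0.5, "sea": 0.5},
    "sky": {"is": 0.9, "<end>": 0.1},
    "sea": {"is": 0.8, "<end>": 0.2},
    "is": {"blue": 0.8, "grey": 0.2},
    "blue": {"<end>": 1.0},
    "grey": {"<end>": 1.0},
}


def generate(max_words=10):
    """Build a reply one word at a time, always choosing the most likely next word."""
    reply = []
    current = "<start>"
    for _ in range(max_words):
        options = NEXT_WORD[current]
        current = max(options, key=options.get)  # greedy choice
        if current == "<end>":
            break  # the model signals that the reply is complete
        reply.append(current)
    return " ".join(reply)


print(generate())
```

With this table, the loop produces “the sky is blue” and then stops at the `<end>` signal.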

4. Refine the response

In this step, the chatbot makes sure the response is well-formed, coherent, natural-sounding, and engaging. Much of that polish comes from the LLM itself, which learned natural phrasing during training; some chatbots also run extra checks, such as safety filters, before replying. If a check finds a problem, the response can be adjusted or regenerated until it meets the desired quality standards.

5. Respond to you

The chatbot presents you with the refined response, the one meeting the highest standards of quality it can achieve. This is the final step, where you receive a well-crafted response, made just for you!

Summary

The Large Language Model (LLM) is the “digital brain” powering AI chatbots and assistants. It is created through a rigorous training process on vast amounts of text data. Training that digital brain on human-produced text is what enables it to provide human-like intelligence. And it’s this intelligence that allows us to interact with AI bots in natural language.

Surprisingly, both the creation of LLMs and the operation of chatbots depend upon a process comparable to biological evolution. The LLM “evolves” the features that allow it to function intelligently, and the chatbot “evolves” an intelligent response to each of your prompts. With this understanding, we can appreciate the complexity and sophistication of chatbot technology, and how it keeps improving the way we interact with machines.

In this article, AI assistant Alex and I tried our best to simplify chatbots and natural language processing. How do you think we did?

Success! I like the way you use a familiar example to explain each part of the process, e.g. the cook cleaning the food to prepare it.